Pytest with Python

Pytest provides a structured way to write test cases in Python. By creating one test per functionality, we can pinpoint exactly which functionality is failing. Pytest also lets us run only selected tests, mark tests as expected failures, run tests in parallel, and much more.

An added advantage is that we can install plugins that extend pytest's test cases and results; these plugins are built on pytest's hooks and fixtures. With pytest, you can also arrange tests into categories such as sanity or regression.

This is a long article that covers pytest end to end.

Make sure no test depends on another test: complete isolation makes testing more reliable.

Installation:

pip install pytest

Test Discovery with pytest:

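A test is an ordinary function or method; what sets it apart is its name and the file it lives in. Keep the following points in mind when creating tests (these are pytest's default discovery rules):

- The function name must start with the word test, e.g. test_sum
- The class name must start with the word Test, e.g. TestMath, and the class must not define an __init__ method. It is not mandatory to put a test case inside a class
- The file name must start with test_ (or end with _test.py), e.g. test_mathematics.py

The underscore after test is optional in function and method names (testsum would also be collected); I add it for readability. In file names, however, the default pattern test_*.py requires it.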

What happens when a function is not prefixed with test?

If a function's name does not start with test, pytest treats it as an ordinary Python function (for example, a helper) and does not collect or execute it.
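To make the naming rules concrete, here is a small sketch of a test file. The file name test_mathematics.py and the helper add() are hypothetical, chosen only for illustration. Under pytest's default settings, test_add and TestAdd.test_add_negative would be collected, while add() and check_zero() would not.

```python
# test_mathematics.py (hypothetical file name matching the default test_*.py pattern)


def add(a, b):
    # Helper without the "test" prefix: pytest does not collect it,
    # but tests can still call it
    return a + b


def test_add():
    # Collected: module-level function whose name starts with "test"
    assert add(2, 4) == 6


class TestAdd:
    # Collected: class name starts with "Test" and it defines no __init__
    def test_add_negative(self):
        assert add(-2, -4) == -6

    def check_zero(self):
        # Not collected: method name does not start with "test"
        assert add(0, 0) == 0
```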

Running Pytest:

Create a file called test_sample.py and add the following code to it:

```python
def test_say_hello():
    print("Hello")


def welcome():
    print("welcome")
```

Execute the following command in the terminal:

```
pytest -vvv  # -vvv for a detailed log

# output
=================== test session starts ===========
collected 1 item

test_sample.py::test_say_hello PASSED [100%]

=================== 1 passed in 0.01s ==============
```

Notice in the output above that only the function with the test prefix was executed, not welcome(). You also do not see "Hello" printed; we will learn how to print output shortly.

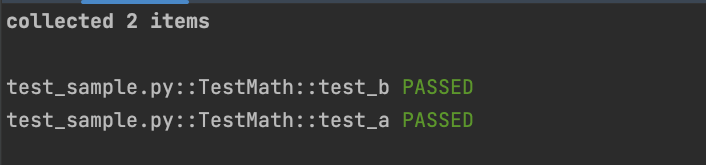

Ordering/Sequencing tests

You can order your tests, that is, set the sequence in which pytest executes them.

For this, use a plugin called pytest-order; install it with pip install pytest-order. In the example below, test_b runs first because it has the lower order number.

```python
import pytest


@pytest.mark.math
class TestMath:
    @pytest.mark.order(2)
    def test_a(self):
        assert False

    @pytest.mark.order(1)
    def test_b(self):
        pass
```

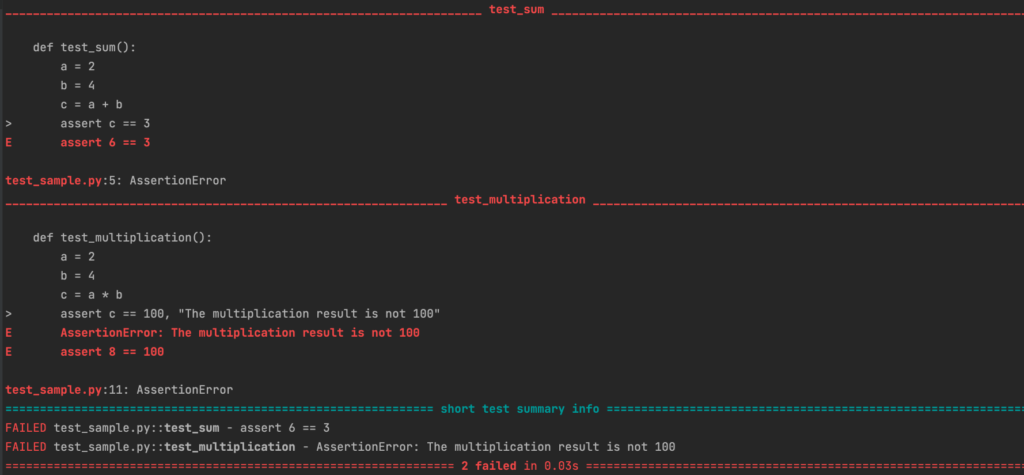
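Besides plain numbers, pytest-order also accepts relative and negative positions. The sketch below assumes a recent pytest-order release, and the test names are hypothetical. With the plugin installed, test_connect should run before test_create_record, and test_cleanup (order -1) should run last. Without the plugin, pytest only warns about the unknown order mark and runs the tests in file order.

```python
import pytest


def test_create_record():
    record = {"id": 1}
    assert record["id"] == 1


@pytest.mark.order(before="test_create_record")
def test_connect():
    # Relative ordering: runs before test_create_record
    assert "db".upper() == "DB"


@pytest.mark.order(-1)
def test_cleanup():
    # Negative index: -1 means "run last"
    assert len([]) == 0
```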

Assertions in pytest:

In the function above we only printed a message. When writing test cases for real functionality, we check whether the result matches our criteria, and if it does not, we fail the test case.

We use assertions to check whether our criteria are met, with Python's built-in assert statement.

Add the code below to the test file and run pytest in the terminal. Both tests will fail: one with pytest's default failure message and the other with the message we supplied. So with assert, we can state why a particular test failed.

```python
def test_sum():
    a = 2
    b = 4
    c = a + b
    assert c == 3


def test_multiplication():
    a = 2
    b = 4
    c = a * b
    assert c == 100, "The multiplication result is not 100"
```

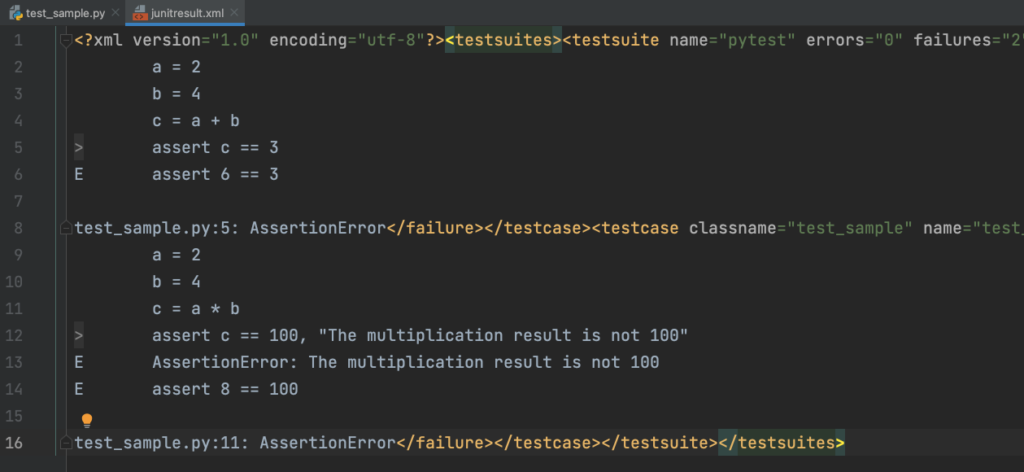

Report:

So far we have seen the results only in the terminal, but in practice they often need to be shared with a team lead or other peers, and console output is not enough for that.

We can generate reports in multiple ways: one is a JUnit XML report (the --junitxml option), another is an HTML report. JUnit XML is a widely accepted format that other tools can turn into richer reports, which is useful if you are using Jenkins.

Run the following command in the terminal to write the results as JUnit XML:

pytest --junitxml junitresult.xml

# junitresult.xml is the filename, where the result should be stored

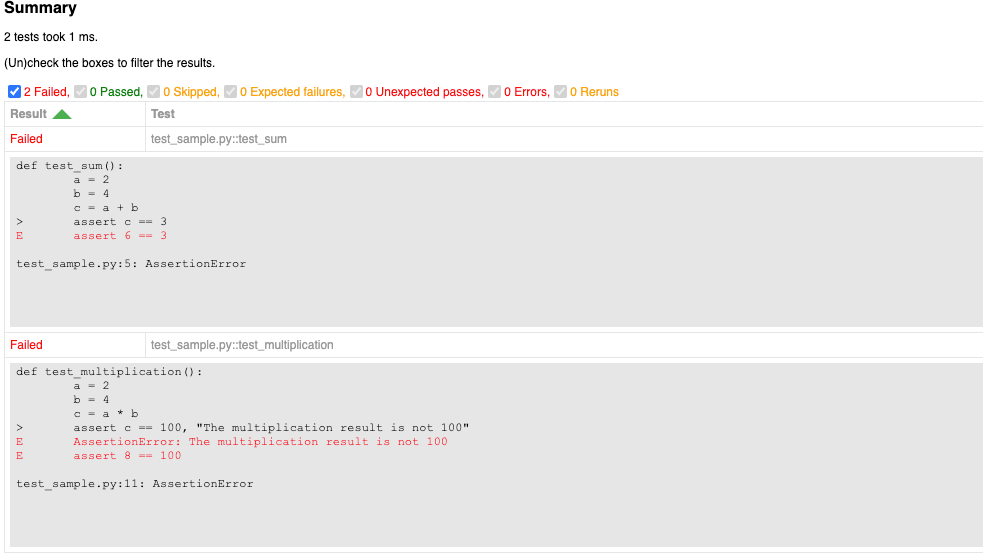
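Because JUnit XML is plain XML, it is also easy to post-process yourself, for example to share a short summary with your team. The following is a minimal sketch using only the standard library. It assumes the usual layout in which each test is a testcase element that contains a failure, error, or skipped child element when it did not pass; the function name summarize_junit is hypothetical.

```python
import xml.etree.ElementTree as ET


def summarize_junit(source):
    """Count test outcomes in a JUnit XML report.

    `source` may be a file path (e.g. "junitresult.xml") or a binary file object.
    """
    root = ET.parse(source).getroot()
    counts = {"passed": 0, "failed": 0, "error": 0, "skipped": 0}
    failed = []
    # iter() walks the whole tree, so this works whether the root is
    # <testsuites> or a single <testsuite>
    for case in root.iter("testcase"):
        label = f"{case.get('classname')}::{case.get('name')}"
        if case.find("failure") is not None:
            counts["failed"] += 1
            failed.append(label)
        elif case.find("error") is not None:
            counts["error"] += 1
        elif case.find("skipped") is not None:
            counts["skipped"] += 1
        else:
            counts["passed"] += 1
    return counts, failed
```

For the report generated above, you would call counts, failed = summarize_junit("junitresult.xml").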

HTML report

To generate an HTML report, install the pytest-html plugin: pip install pytest-html

Run the command below in the terminal; the output shows where the report was written, for example "Generated html report: file:///path/report.html"

pytest --html=report.html

# output

------ Generated html report: file:///path/pytest_examples/report.html ------

===== short test summary info =====

FAILED test_sample.py::test_sum - assert 6 == 3

FAILED test_sample.py::test_multiplication - AssertionError: The multiplication result is not 100

===== 2 failed in 0.02s =====

Collect only or dry run

If you want to check which tests would be executed without actually running them, use the --collect-only option. This is useful for finding out why pytest is not picking up one of your tests.

```
pytest --collect-only

====== test session starts ========
collected 2 items

<Module test_sample.py>
  <Function test_sum>
  <Function test_multiplication>

====== 2 tests collected in 0.00s =
```

Printing to screen with Pytest:

By default, pytest captures everything a test prints, so print output is not shown for passing tests (for failing tests it appears in the "Captured stdout call" section of the report). If you are using pytest for learning or debugging, you may want the print statements to show up in the output.
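If you want to check what a test prints, rather than just see it, pytest's built-in capsys fixture captures stdout and stderr so you can assert on them. A short sketch; report_sum() is a hypothetical function under test.

```python
def report_sum(a, b):
    # Hypothetical function under test that prints its result
    result = a + b
    print(f"result sum is {result}")
    return result


def test_report_sum(capsys):
    # capsys is a built-in pytest fixture; pytest passes it in by name
    assert report_sum(2, 4) == 6
    captured = capsys.readouterr()  # everything printed so far
    assert captured.out == "result sum is 6\n"
```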

To print to the screen, pass the -s argument (short for --capture=no) to pytest:

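```
pytest -s

test_sample.py result sum is 6
Fresult multi is 8
F
```

Each F is pytest's progress marker for a failed test, printed right after that test's own output. (The printed lines come from the versions of the tests that print their results, shown in the next section.)

Execute a specific test, class, file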

Let's move the tests into a class, keeping them in the same file, and make them print their results:

```python
class TestMath:
    def test_sum(self):
        a, b = 2, 4
        c = a + b
        print(f"result sum is {c}")
        assert c == 3

    def test_multiplication(self):
        a, b = 2, 4
        c = a * b
        print(f"result multi is {c}")
        assert c == 100, "The multiplication result is not 100"
```

We can use the -k argument to run a specific test, class, or file; it selects the tests whose names (including their class and file names) match the given expression.

```
pytest -k test_sum

# output
collected 2 items / 1 deselected / 1 selected

# to select several tests:
# pytest -k "test_sum or test_multiplication"

# running a class
pytest -k TestMath

# output
collected 2 items

# running a file
pytest -k test_sample.py

# output
collected 2 items
```

You can also use -k to exclude certain functions, classes, and files:

```
pytest -k "not test_sum"  # test
pytest -k "not TestMath"  # class
pytest -k "not test_sample.py"  # file
```

You can combine expressions as well, using and, or, and not:

```
pytest -k "test_multiplication and not test_sum"
```

Categorization of Tests, Tags or Markers

When you build a test framework, you need to group tests by priority and functionality.

- To categorize tests, we use markers (also called tags): @pytest.mark.marker_name
- Each test can be categorized
- Each test class can be categorized
- Multiple markers can be associated with a test or a class
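The markers used in the example below (math, sum, multi, xyz) are custom markers, and pytest emits a PytestUnknownMarkWarning for marks it does not know (an error under --strict-markers). One way to register them is the pytest_configure hook in a conftest.py file; the descriptions in this sketch are placeholders.

```python
# conftest.py: pytest loads this file automatically from the test directory


def pytest_configure(config):
    # Register each custom marker as "name: description" so that pytest
    # recognizes it and lists it under `pytest --markers`
    for marker in (
        "math: tests for math operations",
        "sum: tests for addition",
        "multi: tests for multiplication",
        "xyz: example extra marker",
    ):
        config.addinivalue_line("markers", marker)
```

The same registration can also live in the markers option of a pytest.ini file.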

```python
import pytest


@pytest.mark.math
class TestMath:
    @pytest.mark.sum
    def test_sum(self):
        a, b = 2, 4
        c = a + b
        print(f"result sum is {c}")
        assert c == 3

    @pytest.mark.multi
    @pytest.mark.xyz
    def test_multiplication(self):
        a, b = 2, 4
        c = a * b
        print(f"result multi is {c}")
        assert c == 100, "The multiplication result is not 100"
```

Running Tags

We can use the -m argument followed by a marker name to run only the tests that carry that marker (-m also accepts expressions such as "math and not sum"):

```
pytest -m 'sum'

# output
collected 2 items / 1 deselected / 1 selected
```

Running tests in parallel with pytest

To save time, we can run our tests in parallel. One thing to remember: make sure your tests do not depend on each other. How many tests can usefully run at once depends on your hardware, mainly the number of CPU cores.
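A common way parallel tests end up depending on each other is by writing to the same file. pytest's built-in tmp_path fixture gives every test its own fresh temporary directory, which avoids that. The save_result() function below is hypothetical and used only for illustration.

```python
from pathlib import Path


def save_result(folder: Path, value: int) -> Path:
    # Hypothetical function under test: writes a value to a file in `folder`
    out_file = folder / "result.txt"
    out_file.write_text(str(value))
    return out_file


def test_save_result(tmp_path):
    # tmp_path is a unique pathlib.Path per test, so two workers running
    # tests at the same time never write to the same file
    out_file = save_result(tmp_path, 6)
    assert out_file.read_text() == "6"
```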

Install the plugin: pip install pytest-xdist

Then run the tests with the -n argument followed by the number of worker processes. If you are not sure how many workers your machine can handle, use auto, which lets pytest-xdist choose the number of workers based on your CPU count:

pytest -n 2

# output

2 workers [2 items] # items here are tests

pytest -n auto

# output

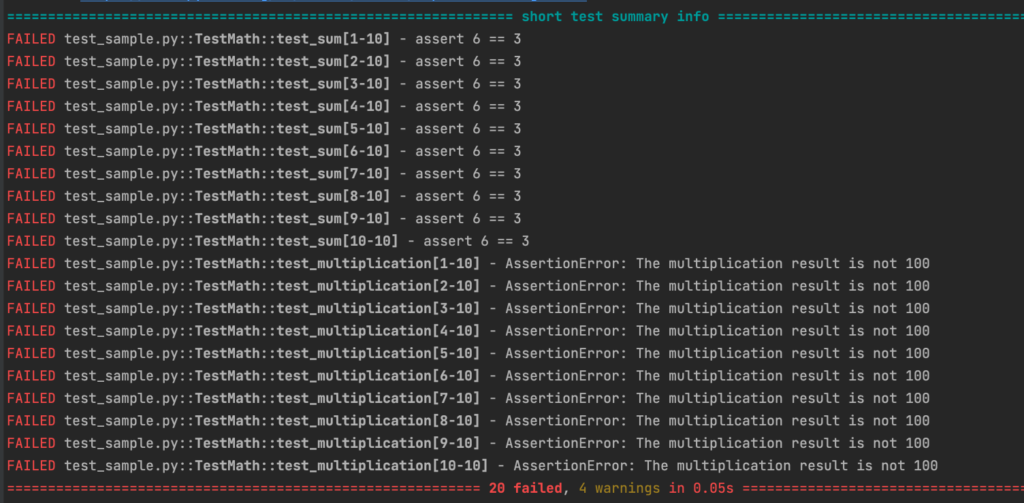

8 workers [2 items] Repeat with counts

When I create a test, I make sure it runs reliably before I push it to the repository. Personally, I run it at least 5 times to check that it passes every time, but doing this manually is tedious.

We can use a plugin called pytest-repeat; install it with pip install pytest-repeat

Pass the --count argument followed by a number, which sets how many times each test runs.

pytest --count 10

# there are 2 tests, so 2 * 10 = 20 test runs in total

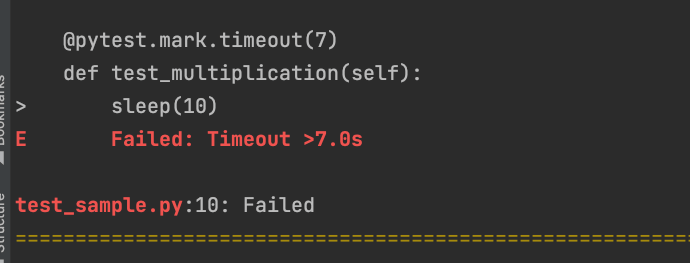
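pytest-repeat also provides a marker for repeating a single test instead of every test in the run. A sketch, assuming pytest-repeat is installed; multiply() is hypothetical.

```python
import pytest


def multiply(a, b):
    # Hypothetical function under test
    return a * b


@pytest.mark.repeat(5)
def test_multiplication_is_stable():
    # With pytest-repeat installed, this test is collected 5 times
    assert multiply(2, 4) == 8
```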

Timeout

Some tests take a long time. If you want to fail a test that runs longer than a limit, use the pytest-timeout plugin; install it with pip install pytest-timeout. The following command sets a 5-second limit for every test:

pytest --timeout=5

If you want to set the limit for an individual test, add a marker instead:

```python
from time import sleep

import pytest


class TestMath:
    @pytest.mark.timeout(7)
    def test_multiplication(self):
        sleep(10)  # exceeds the 7-second limit, so pytest-timeout fails this test
        a, b = 2, 4
        c = a * b
        print(f"result multi is {c}")
        assert c == 100, "The multiplication result is not 100"
```
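If you cannot install a plugin, a rough alternative is to measure the duration yourself with time.monotonic(). This is a different technique from pytest-timeout: it only checks the elapsed time after the call returns and cannot interrupt a test that hangs. The slow_multiply() function and the 1-second limit are hypothetical.

```python
import time


def slow_multiply(a, b, delay=0.0):
    # Hypothetical function under test; `delay` simulates slow work
    time.sleep(delay)
    return a * b


def test_multiplication_is_fast():
    start = time.monotonic()
    result = slow_multiply(2, 4, delay=0.01)
    elapsed = time.monotonic() - start
    assert result == 8
    # Checked only after the call returns: unlike pytest-timeout,
    # this cannot stop a call that hangs forever
    assert elapsed < 1.0, f"took {elapsed:.2f}s, limit is 1.0s"
```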

Dependency

You can make a test case depend on other test cases with the pytest-depends plugin; install it with pip install pytest-depends. If a test it depends on fails, the dependent test is skipped.

I personally avoid writing test cases with dependencies.

Note: pytest-dependency is another plugin for the same purpose, but it did not work out for me.
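One way to avoid dependencies altogether is to give each test the setup it needs through a fixture, instead of relying on an earlier test having run. A minimal sketch; the numbers fixture is hypothetical.

```python
import pytest


@pytest.fixture
def numbers():
    # pytest calls this function anew for every test that requests it,
    # so each test gets its own fresh data
    return {"a": 2, "b": 4}


def test_sum(numbers):
    assert numbers["a"] + numbers["b"] == 6


def test_multiplication(numbers):
    assert numbers["a"] * numbers["b"] == 8
```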

```python
import pytest


@pytest.mark.math
class TestMath:
    # @pytest.mark.xfail(reason="deliberate fail")
    def test_a(self):
        assert False

    def test_b(self):
        pass

    @pytest.mark.depends(on=["test_a"])
    def test_c(self):
        pass

    @pytest.mark.depends(on=["test_b", "test_c"])
    def test_e(self):
        pass
```

```
collected 4 items

test_sample.py::TestMath::test_a FAILED [ 25%]
test_sample.py::TestMath::test_b PASSED [ 50%]
test_sample.py::TestMath::test_c SKIPPED (test_sample.py::TestMath::test_c depends on test_sample.py::TestMath::test_a) [ 75%]
test_sample.py::TestMath::test_e SKIPPED (test_sample.py::TestMath::test_e depends on test_sample.py::TestMath::test_c) [100%]
```

Expected Failures:

Sometimes you know a test will fail (for example, because of an existing bug), but you do not want it reported as a failure. In such cases, mark it with xfail: if the test fails, it is reported as XFAIL (expected failure) instead of FAILED, and if it unexpectedly passes, it is reported as XPASS.
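The xfail marker also accepts a few options. In this sketch, reason documents why the failure is expected, raises limits the expected failure to an AssertionError (any other exception is reported as a normal failure), and strict=True reports the test as failed if it unexpectedly passes, so you notice when the bug is fixed. The multiply() function is hypothetical.

```python
import pytest


def multiply(a, b):
    # Hypothetical function under test
    return a * b


@pytest.mark.xfail(reason="known bug: result is not 100", raises=AssertionError, strict=True)
def test_multiplication():
    assert multiply(2, 4) == 100
```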

```python
import pytest


class TestMath:
    @pytest.mark.xfail()
    def test_multiplication(self):
        a, b = 2, 4
        c = a * b
        print(f"result multi is {c}")
        assert c == 100, "The multiplication result is not 100"
```

Skipping tests:

If you want to skip a test, use the skip marker.

```python
import pytest


class TestMath:
    def test_sum(self):
        a, b = 2, 4
        c = a + b
        print(f"result sum is {c}")
        assert c == 3

    @pytest.mark.skip("skip this test, I am the reason")
    def test_multiplication(self):
        a, b = 2, 4
        c = a * b
        print(f"result multi is {c}")
        assert c == 100, "The multiplication result is not 100"
```

```
pytest -v

# output
collected 2 items

test_sample.py::TestMath::test_sum FAILED [ 50%]
test_sample.py::TestMath::test_multiplication SKIPPED (skip this test, I am the reason) [100%]
```
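Besides the marker, you can also skip from inside a test by calling pytest.skip(), which is handy when the reason is only known at run time. A sketch; the environment variable name EXAMPLE_API_TOKEN is hypothetical.

```python
import os

import pytest


def test_needs_api_token():
    # Hypothetical environment variable, used only for illustration
    token = os.environ.get("EXAMPLE_API_TOKEN")
    if token is None:
        # Stops the test here and reports it as SKIPPED
        pytest.skip("EXAMPLE_API_TOKEN is not set")
    assert token.strip() != ""
```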

You can also skip a test only when a condition is true, using the skipif marker.
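In real suites the condition usually comes from the environment, such as the Python version or the operating system. A sketch using sys.version_info: math.prod was added in Python 3.8, so this test is skipped on older interpreters.

```python
import math
import sys

import pytest


@pytest.mark.skipif(sys.version_info < (3, 8), reason="math.prod requires Python 3.8+")
def test_product():
    assert math.prod([2, 4]) == 8
```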

import pytest

class TestMath:

def test_sum(self):

a, b = 2, 4

c = a + b

print(f"result sum is {c}")

assert c == 3

@pytest.mark.skipif(3 > 6, reason="skipping if criteria matches")

def test_multiplication(self):

a, b = 2, 4

c = a * b

print(f"result multi is {c}")

assert c == 100, "The multiplication result is not 100"pytest -v

# output

collected 2 items

test_sample.py::TestMath::test_sum FAILED [ 50%]

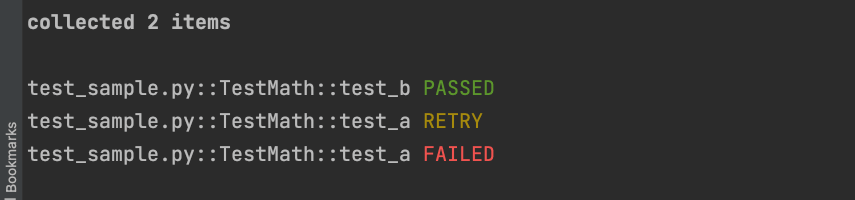

test_sample.py::TestMath::test_multiplication FAILED [100%]Retry failed tests

You can rerun failed tests within the same execution using the pytest-retry plugin; install it with pip install pytest-retry

```python
import pytest


@pytest.mark.math
class TestMath:
    @pytest.mark.order(2)
    def test_a(self):
        assert False

    @pytest.mark.order(1)
    def test_b(self):
        pass
```

Run command:

pytest -v --retries 10

In the below screenshot, you can see only one retry, but in reality it retried 10 times (I did not include them all because of the length of the trace).